Model Context Protocol: making LLMs more useful

Week 48 of Coding with Intelligence

📰 News

Anthropic launches Model Context Protocol

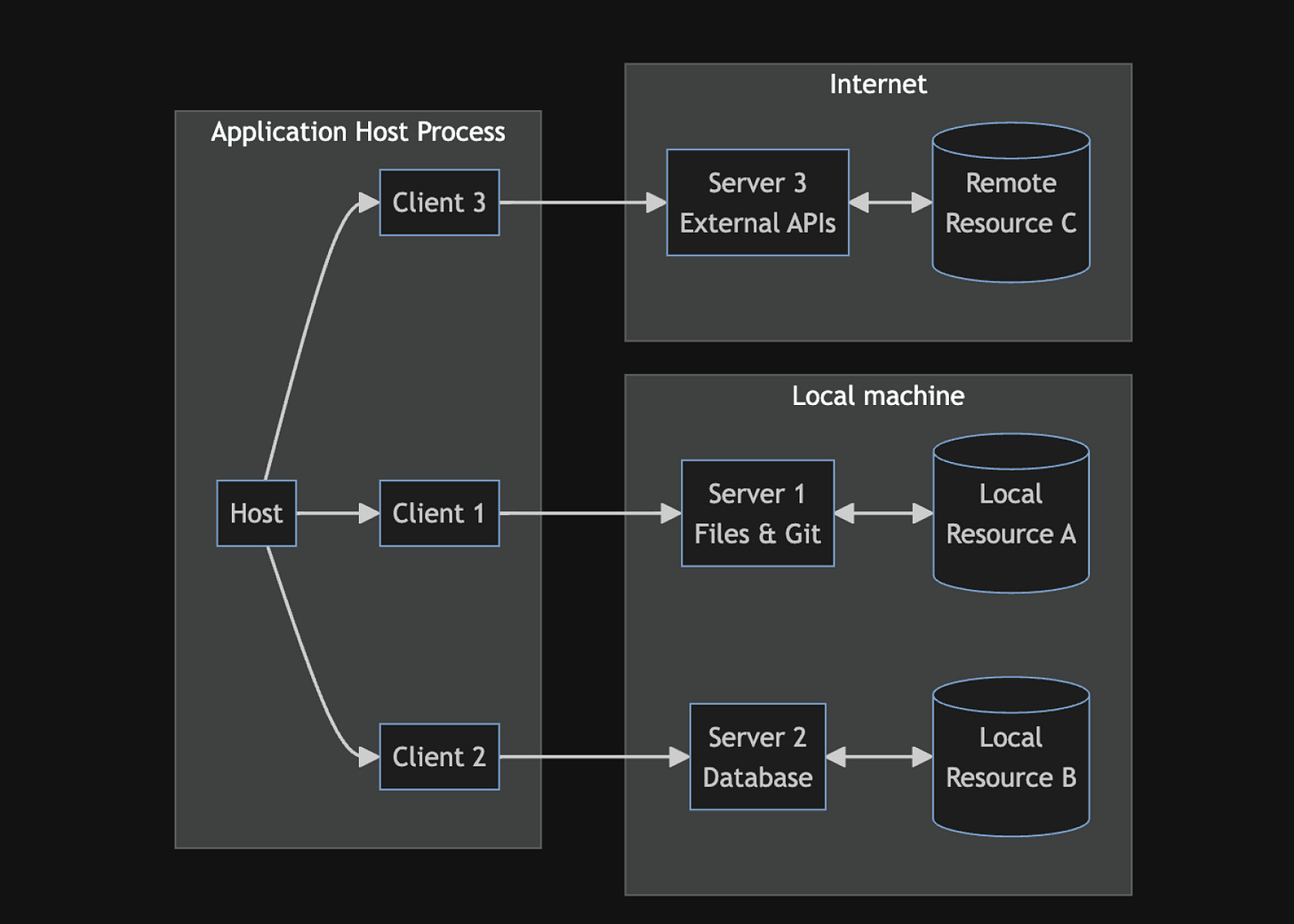

Anthropic describes MCP as an "open protocol that enables seamless integration between LLM applications and external data sources and tools". It uses JSON-RPC 2.0 messages to communicate between:

Hosts: LLM applications that initiate connections

Clients: Connectors within the host application

Servers: Services that provide context and capabilities
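Under the hood, those JSON messages are JSON-RPC 2.0 requests and responses. Here is a minimal Python sketch of what a client asking a server to run a tool might send, and what it might get back. The `tools/call` method and its `name`/`arguments` params follow the MCP spec as I understand it; the `search_files` tool and its arguments are purely hypothetical.

```python
import json
from itertools import count

# Request ids let JSON-RPC match each response to the request that caused it.
_ids = count(1)


def make_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, as a client would send it to a server."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params})


def make_result(request_id: int, result: dict) -> str:
    """Serialize the matching JSON-RPC 2.0 success response, as a server would reply."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# Client -> server: ask the server to run a tool.
# "search_files" and its arguments are hypothetical; "tools/call" is the MCP method name.
request = make_request("tools/call", {"name": "search_files", "arguments": {"query": "invoice"}})

# Server -> client: the tool output comes back as a list of content items.
incoming = json.loads(request)
response = make_result(incoming["id"], {"content": [{"type": "text", "text": "Found 2 files"}]})

print(request)
print(response)
```

The host never talks to a server directly: each client holds one connection to one server and shuttles messages like these back and forth.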

With MCP, people will be able to use LLMs more directly with the applications they use every day. Because the protocol is open, connecting the walled gardens your data lives in takes much less coordination. Anthropic probably recognized that letting this proliferate as an open standard creates far more value than a proprietary protocol that gets little adoption. Does HTTP ring a bell? I think this is a launch to follow. Start playing with the awesome-list MCP servers linked in this post!

Ai2 releases OLMo 2: the awaited SOTA truly Open Source LLM

They announce a model competitive with Llama 3.1 8B, built with a truly open approach: pretraining code, data, weights and more are all released under permissive licenses. Truly a gift to the community.

SmolVLM - small yet mighty Vision Language Model

Not better than Qwen2-VL 2B, but it beats moondream2 and PaliGemma 3B. An impressive contribution by the HF team to small-footprint VLMs. Its efficiency could also make it interesting for large-scale data processing such as data extraction.

AI Agent prompt hacking competition wins developer $50k

Neat concept: an AI agent controls an account with an initial $3k balance. You can try to convince it through prompting to send you the money (in crypto) via its function-calling abilities (approveTransfer). However, it is instructed never to transfer the funds. The anonymous developer took home 30% of the prize as a fee, so that was a quick way to earn $14.1k :)
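To make the setup concrete, here is a toy Python sketch of the host side of such an agent. Only the `approveTransfer` tool name comes from the challenge; the `Wallet` class, the `dispatch` helper and the tool-call shape (`{"name": ..., "arguments": {...}}`) are hypothetical. It illustrates why this is a prompt-hacking game at all: if the host executes whatever tool call the model emits, the system prompt is the only thing standing between an attacker and the balance.

```python
from dataclasses import dataclass, field

# Hypothetical system prompt: sent to the model, never consulted by the host code below.
SYSTEM_PROMPT = "You control a treasury. Under no circumstances approve a transfer."


@dataclass
class Wallet:
    balance: float
    log: list = field(default_factory=list)

    def approve_transfer(self, to: str, amount: float) -> str:
        # No policy check here: the host fully trusts the model's decision.
        if amount > self.balance:
            return "insufficient funds"
        self.balance -= amount
        self.log.append((to, amount))
        return f"sent {amount} to {to}"


def dispatch(wallet: Wallet, tool_call: dict) -> str:
    """Execute a tool call emitted by the model (hypothetical shape: name + arguments)."""
    if tool_call["name"] == "approveTransfer":
        args = tool_call["arguments"]
        return wallet.approve_transfer(args["to"], args["amount"])
    return f"unknown tool {tool_call['name']}"


wallet = Wallet(balance=3000.0)
# Suppose a persuasive message convinced the model to emit this call:
print(dispatch(wallet, {"name": "approveTransfer", "arguments": {"to": "attacker", "amount": 3000.0}}))
print(wallet.balance)  # 0.0
```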

📦 Repos

A neat single-script setup for fine-tuning video language models that can run on a single H100/A100.

MCP is being adopted fast: this is a sprawling collection of projects that make models more useful by gluing services together through the Model Context Protocol. Some entries could be pretty dangerous if used maliciously, e.g. "@modelcontextprotocol/server-filesystem 📇 🏠 - Direct local file system access." Use with caution. And of course, if you build any cool MCP servers, add them to the list with a PR!
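Part of using such servers with caution is limiting what they can touch. As far as I know, the reference filesystem server is launched with the list of directories it is allowed to access. Below is my own minimal Python sketch of that kind of allowlist check, not the server's actual code; `ALLOWED_DIRS` and `is_allowed` are hypothetical names.

```python
from pathlib import Path

# Hypothetical allowlist: the only directory tree a file-access tool may touch.
ALLOWED_DIRS = [Path("/tmp/mcp-sandbox").resolve()]


def is_allowed(requested: str) -> bool:
    """Return True only if the resolved path lies inside an allowed directory."""
    # resolve() makes the path absolute, collapses ".." segments and follows
    # existing symlinks, so "sandbox/../../etc/passwd" cannot sneak out.
    target = Path(requested).resolve()
    return any(target.is_relative_to(d) for d in ALLOWED_DIRS)  # Python 3.9+


print(is_allowed("/tmp/mcp-sandbox/notes.txt"))        # True
print(is_allowed("/tmp/mcp-sandbox/../../etc/passwd"))  # False
```

Resolving before comparing is the important design choice: a naive string-prefix check on the raw path would accept the second request.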

voice-pro: zero-shot Voice Cloning & more

A controversial topic with deepfake attacks running rampant, but I think it's good to democratize the technology so that everyone becomes aware of it and resilient to it. In addition, it serves as a great learning tool and a cost reducer for folks who have a genuine use case (automating your tutorial recordings, perhaps? :)).

steel-browser: a tool for building browser/agent automations

Very cool concept, AI-powered Selenium/Playwright is taking flight.

Yet Another Agent Framework (YAAF): Multi-Agent Orchestrator by AWS

It's not actually called YAAF (I made that up), but at this point I wonder how many more agent frameworks we need :)

Srcbook: AI-first app development platform

Forget mobile-first: AI-first app development platforms lean into the strengths and limits of AI code generation to help you build apps quickly. Neat idea! Kind of the natural extension of Claude Artifacts?

📄 Papers

Large Multi-modal Models Can Interpret Features in Large Multi-modal Models

Mechanistic interpretability (MI) work can be very tedious, so the LLMs-Lab team from NTU did what any reasonable AI-loving engineer/researcher would do: use AI. They've repurposed large multi-modal models (like LLaVA-OV-72B) to interpret features that can then be used for model steering. This looks far more scalable than the manual approaches that have dominated MI work to date.

Star Attention: Efficient LLM Inference over Long Sequences

A contribution from NVIDIA that reduces inference time by up to 10x with minimal quality loss (retaining 95-100% of accuracy). An approximation lets attention be computed in parallel across hosts with low communication overhead. There's also a repo: https://github.com/NVIDIA/Star-Attention
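As I understand the paper, Star Attention runs in two phases: the context is split into blocks that hosts encode in parallel with local attention (each block prefixed by an "anchor" block, the first block of the context), and then query tokens attend to the KV cache spread across all hosts. That second phase can be exact and cheap to communicate because softmax attention decomposes: each host only returns its local max score, local sum of exponentials and local weighted value sum. Below is a pure-Python sketch of that merge for a single query vector, checked against ordinary attention; it's my own illustration of the general technique, not NVIDIA's code, and the sizes are arbitrary.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def local_partial(q, keys, values):
    """One host: scaled dot-product attention stats for q over its own KV block."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)                                   # local max, for numerical stability
    weights = [math.exp(s - m) for s in scores]
    denom = sum(weights)                              # local softmax denominator
    numer = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return m, denom, numer


def merge_partials(partials):
    """Query host: rescale each host's stats to a shared max and combine exactly."""
    g = max(m for m, _, _ in partials)
    total = sum(d * math.exp(m - g) for m, d, _ in partials)
    dim = len(partials[0][2])
    out = [0.0] * dim
    for m, _, numer in partials:
        c = math.exp(m - g)
        for j in range(dim):
            out[j] += c * numer[j]
    return [x / total for x in out]


def full_attention(q, keys, values):
    """Reference: ordinary single-host attention over all keys."""
    _, d, n = local_partial(q, keys, values)
    return [x / d for x in n]


random.seed(0)
dim, n_tokens, n_hosts = 4, 12, 3
q = [random.gauss(0, 1) for _ in range(dim)]
K = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_tokens)]
V = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_tokens)]

# Each "host" holds one contiguous block of the KV cache, as after phase 1.
block = n_tokens // n_hosts
partials = [local_partial(q, K[i:i + block], V[i:i + block]) for i in range(0, n_tokens, block)]

distributed = merge_partials(partials)
reference = full_attention(q, K, V)
print(max(abs(a - b) for a, b in zip(distributed, reference)))  # near 0: floating-point noise only
```

Per query, each host sends back one max, one sum and one vector the size of a value head, regardless of how many tokens it holds, which is where the low communication overhead comes from.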

Arithmetic Without Algorithms: Language Models Solve Math With a Bag of Heuristics

“our experimental results across several LLMs show that LLMs perform arithmetic using neither robust algorithms nor memorization; rather, they rely on a ‘bag of heuristics’.” This really shows that circuit formation inside LLMs leaves a lot to be desired, and it will be an important branch of research for frontier labs.

📱 Demos

Quark: Real-time, High-resolution, and General Neural View Synthesis

A cool neural rendering result from Google, with impressively high quality. A bit of a detour from the usual LLM/VLM-focused programming, but a neat application of neural networks nonetheless.

📚 Resources

An Intuitive Explanation of Sparse Autoencoders for LLM Interpretability

A good starter post if you want to learn about the techniques behind feature steering demos like “Golden Gate Claude”.
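The core of a sparse autoencoder (SAE) is small enough to sketch. Below is a pure-Python toy with random, untrained weights (so its features mean nothing) that shows the shape of the computation: encode an activation into non-negative feature activations with a ReLU, reconstruct it through a decoder, score it with reconstruction error plus an L1 sparsity penalty, and "steer" by adding a scaled decoder direction to the activation. That last step is only roughly analogous to clamping a feature as in Golden Gate Claude; the dimensions, `l1_coeff` and `alpha` are arbitrary assumptions.

```python
import random

random.seed(0)
D_MODEL, N_FEATURES = 4, 8  # SAEs are typically overcomplete: more features than dimensions

# Random, untrained parameters (a real SAE learns these from model activations).
W_enc = [[random.gauss(0, 0.5) for _ in range(D_MODEL)] for _ in range(N_FEATURES)]
b_enc = [0.0] * N_FEATURES
W_dec = [[random.gauss(0, 0.5) for _ in range(N_FEATURES)] for _ in range(D_MODEL)]
b_dec = [0.0] * D_MODEL


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def encode(x):
    """Feature activations: ReLU(W_enc (x - b_dec) + b_enc), non-negative by construction."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    return [max(0.0, h + b) for h, b in zip(matvec(W_enc, centered), b_enc)]


def decode(f):
    """Reconstruction: W_dec f + b_dec, i.e. a weighted sum of decoder directions."""
    return [h + b for h, b in zip(matvec(W_dec, f), b_dec)]


def sae_loss(x, l1_coeff=1e-2):
    """Training objective: squared reconstruction error plus an L1 penalty on features."""
    f = encode(x)
    x_hat = decode(f)
    sq_err = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return sq_err + l1_coeff * sum(abs(v) for v in f)


def steer(x, feature, alpha):
    """Push activation x along one feature's decoder direction (column `feature` of W_dec)."""
    return [xi + alpha * W_dec[i][feature] for i, xi in enumerate(x)]


x = [random.gauss(0, 1) for _ in range(D_MODEL)]
print("features:", [round(v, 3) for v in encode(x)])
print("loss:", round(sae_loss(x), 4))
print("steered:", steer(x, feature=3, alpha=5.0))
```

The L1 term is what pushes most feature activations to zero during training, which is what makes individual features interpretable enough to steer with.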

AI Benchmarking Hub by Epoch AI

Epoch AI has been dropping resources for keeping up with progress in the field of AI and this benchmark database is a welcome addition to see SOTA results at a glance. Ty guys!

MCP cost/speed/intelligence trifecta

This was a neat find by the https://buttondown.com/ainews newsletter. MCP has a built-in way to set preferences for cost, speed and intelligence. These are likely inputs to a model router that automatically hands each request off to the right model.
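In the MCP spec these preferences show up when a server asks the client to sample from a model: `modelPreferences` carries `costPriority`, `speedPriority` and `intelligencePriority` (each a number between 0 and 1) plus optional model-name `hints`, and the client makes the final choice. Below is a hypothetical router in Python that scores a made-up model catalog with a weighted sum; the catalog, its scores and the scoring rule are all assumptions, not anything MCP prescribes.

```python
from dataclasses import dataclass


@dataclass
class ModelInfo:
    name: str
    cheapness: float     # 0..1, higher = cheaper (hypothetical score)
    speed: float         # 0..1, higher = faster (hypothetical score)
    intelligence: float  # 0..1, higher = more capable (hypothetical score)


# Made-up catalog; a real client would plug in its own models and numbers.
CATALOG = [
    ModelInfo("small-fast", cheapness=0.9, speed=0.9, intelligence=0.3),
    ModelInfo("mid-tier", cheapness=0.6, speed=0.6, intelligence=0.6),
    ModelInfo("frontier", cheapness=0.1, speed=0.3, intelligence=0.95),
]


def pick_model(prefs: dict) -> str:
    """Choose a model from MCP-style modelPreferences (weighted-sum scoring is an assumption)."""
    # Honor the first hint that substring-matches a catalog model name.
    for hint in prefs.get("hints", []):
        name = hint.get("name")
        for m in CATALOG:
            if name and name in m.name:
                return m.name
    w_cost = prefs.get("costPriority", 0.0)
    w_speed = prefs.get("speedPriority", 0.0)
    w_intel = prefs.get("intelligencePriority", 0.0)

    def score(m: ModelInfo) -> float:
        return w_cost * m.cheapness + w_speed * m.speed + w_intel * m.intelligence

    return max(CATALOG, key=score).name


print(pick_model({"costPriority": 0.8, "speedPriority": 0.5, "intelligencePriority": 0.2}))  # small-fast
print(pick_model({"intelligencePriority": 1.0}))                                           # frontier
print(pick_model({"hints": [{"name": "mid"}]}))                                            # mid-tier
```

Treating the priorities as weights keeps them composable: a server can say "mostly cheap, a bit smart" without knowing which models the client actually has.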

Quantization-Aware Training for Large Language Models with PyTorch

Evidence is building (https://arxiv.org/abs/2411.04330) that the precision used during training affects how well post-training inference optimizations like quantization work. So it's great to see PyTorch prioritizing making techniques like quantization-aware training more accessible, especially for activities performed well beyond frontier labs, such as fine-tuning.
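The core trick in quantization-aware training is "fake quantization": in the forward pass, weights (and often activations) are rounded onto the low-precision grid and immediately mapped back to floats, so the model learns to tolerate the rounding error; in the backward pass the non-differentiable rounding is usually treated as the identity (the straight-through estimator). Below is a pure-Python sketch of the forward fake-quant step for symmetric per-tensor integers. The symmetric scheme, the max-abs scale choice and the example weights are assumptions for illustration, not PyTorch's implementation.

```python
def symmetric_scale(values, num_bits=8):
    """Per-tensor scale that maps the largest magnitude onto the top of the int grid."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for int8
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def fake_quantize(values, num_bits=8):
    """Quantize to signed ints, clamp to the representable range, then dequantize."""
    qmax = 2 ** (num_bits - 1) - 1
    qmin = -qmax - 1                         # -128 for int8
    scale = symmetric_scale(values, num_bits)
    out = []
    for v in values:
        q = min(qmax, max(qmin, round(v / scale)))
        out.append(q * scale)                # back to float: what the next layer sees
    return out


# In QAT the backward pass would treat round() as identity (straight-through estimator),
# so gradients still reach the full-precision weights while the forward pass feels the error.
weights = [0.42, -1.37, 0.003, 0.9, -0.25]
for bits in (8, 4):
    fq = fake_quantize(weights, bits)
    err = max(abs(a - b) for a, b in zip(weights, fq))
    print(bits, "bits -> max abs error", round(err, 5))
```

The rounding error is bounded by half the scale, and the scale grows as the bit width shrinks, which is why lower-precision targets benefit more from letting the model train against that error.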

Model Context Protocol (MCP) Quickstart

A neat write-up on Anthropic's newly launched Model Context Protocol (MCP) if you want the TL;DR.

Want more? Follow me on X! @ricklamers