Molmo 72B: the VLM you shouldn't sleep on

Week 39 of Coding with Intelligence

📰 News

Molmo: open source 72B multimodal model by AllenAI

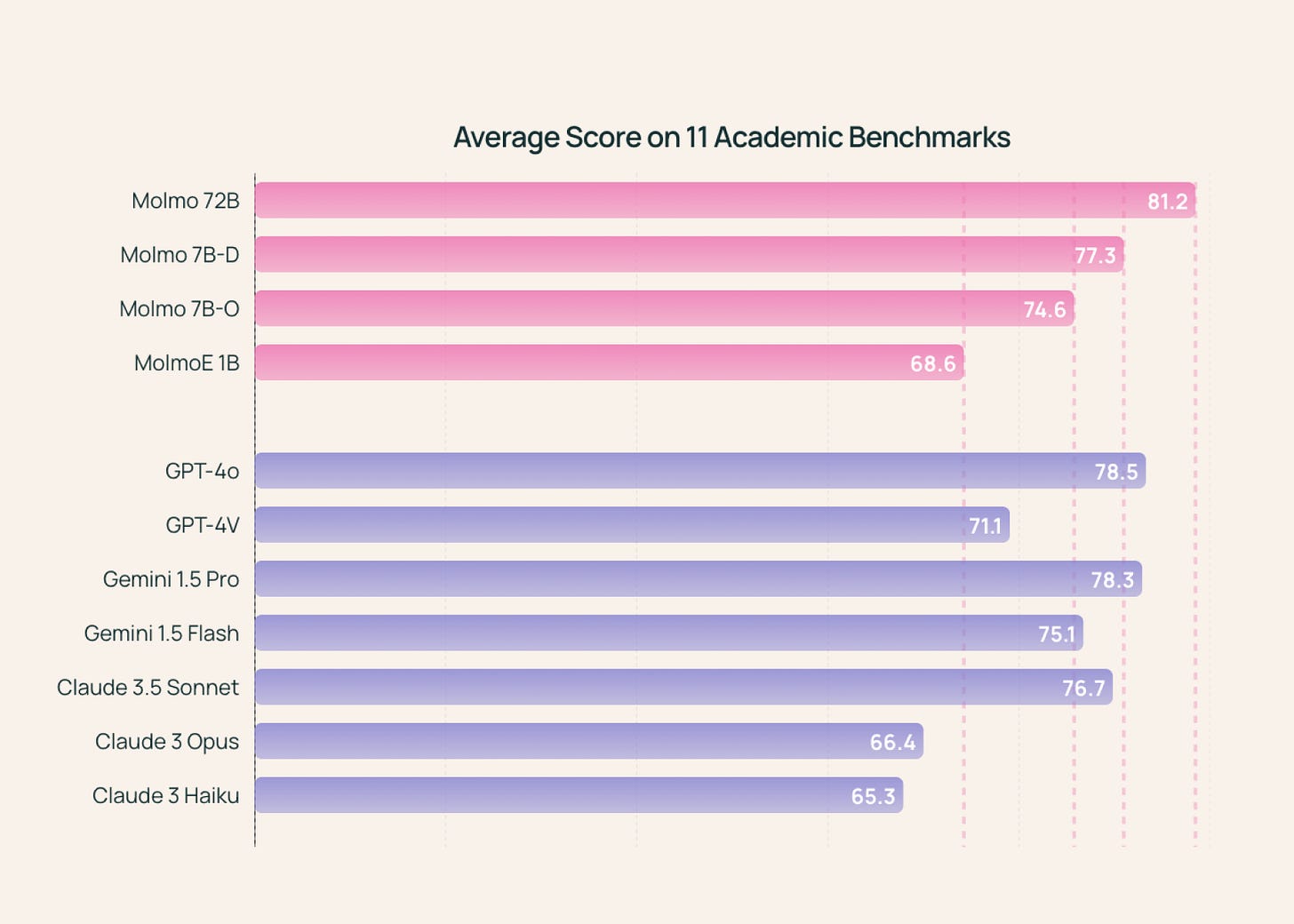

AllenAI released Molmo, a family of open-source multimodal models: MolmoE-1B (1B active/7B total parameters), Molmo-7B-O, Molmo-7B-D, and Molmo-72B. They are trained on PixMo, a novel high-quality dataset, with CLIP ViT-L/14 336px as the vision encoder and various language models as backbones. AllenAI reports SOTA results, beating even GPT-4o on multiple benchmarks.
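To get a feel for what "ViT-L/14 336px" means in token terms, here is a minimal sketch of the patch arithmetic for a single square crop. It assumes plain non-overlapping patches and ignores anything Molmo does on top (multiple crops, pooling, special tokens), so treat it as back-of-the-envelope math rather than Molmo's actual token count. The function name `vit_patch_tokens` is made up for illustration.

```python
def vit_patch_tokens(image_size: int, patch_size: int) -> int:
    """Count non-overlapping square patches in a square image.

    Hypothetical helper for illustration; not part of any Molmo or CLIP API.
    """
    if image_size <= 0 or patch_size <= 0:
        raise ValueError("image_size and patch_size must be positive")
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side * patches_per_side


# ViT-L/14 at 336px: 336 / 14 = 24 patches per side.
print(vit_patch_tokens(336, 14))  # 576
```

So a single 336px crop yields 576 patch embeddings before the language model ever sees them, which is why image inputs eat context quickly.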

Llama 3.2: 90B and 11B VLMs, plus 3B and 1B LLMs for embedded use

The 90B model outperforms GPT-4o mini, so it is getting very close to SOTA performance. What an incredible gift to the community by Meta 👏 Interestingly, they also released a voice model for their apps but didn't open-source it.

📦 Repos

Generalized Knowledge Distillation (GKD) lands in TRL lib

From the paper https://arxiv.org/abs/2306.13649
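For intuition, the paper's central divergence is a generalized Jensen-Shannon divergence between the teacher and student token distributions, evaluated on student-generated sequences. Below is a minimal pure-Python sketch for two discrete distributions, assuming the form JSD(β)(P‖Q) = β·KL(P‖M) + (1−β)·KL(Q‖M) with M = βP + (1−β)Q. The function names are illustrative and this is not TRL's implementation.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in nats; terms where p is zero contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def generalized_jsd(p: Sequence[float], q: Sequence[float], beta: float = 0.5) -> float:
    """Generalized JSD with mixture weight beta (assumed 0 < beta < 1).

    Illustrative sketch only: p plays the teacher, q the student.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must be strictly between 0 and 1")
    # Mixture distribution M = beta * P + (1 - beta) * Q.
    m = [beta * pi + (1.0 - beta) * qi for pi, qi in zip(p, q)]
    return beta * kl_divergence(p, m) + (1.0 - beta) * kl_divergence(q, m)


teacher = [0.7, 0.2, 0.1]
student = [0.4, 0.4, 0.2]
print(generalized_jsd(teacher, student, beta=0.5))
```

At β = 0.5 this reduces to the usual symmetric JSD. Moving β toward either end weights one of the two KL terms more heavily.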

Promptriever: Instruction-Trained Retrievers Can Be Prompted Like Language Models

Code for https://arxiv.org/abs/2409.11136

CritiPrefill: significantly faster prefill for 1M context Llama 3 8B

Code for paper https://arxiv.org/abs/2409.12490

DALDA: Data Augmentation Leveraging Diffusion Model and LLM with Adaptive Guidance Scaling

Useful for building finetuning datasets for VLMs.

📄 Papers

LLMs Still Can't Plan; Can LRMs? A Preliminary Evaluation of OpenAI's o1 on PlanBench

Spoiler alert: Large Reasoning Models (LRMs) do a lot better than non-o1/🍓 style models.

V-STaR: Training Verifiers for Self-Taught Reasoners

"Running V-STaR for multiple iterations results in progressively better reasoners and verifiers, delivering a 4% to 17% test accuracy improvement over existing self-improvement and verification approaches on common code generation and math reasoning benchmarks with LLaMA2 models."

Rethinking Conventional Wisdom in Machine Learning: From Generalization to Scaling

📚 Resources

How to Optimize a CUDA Matmul Kernel for cuBLAS-like Performance: a Worklog

This CUDA resource comes with Andrej Karpathy's approval.

Scaling ColPali to billions of PDFs with Vespa

Retrieval goes multimodal

Labelbox follows Scale AI and publishes a private leaderboard

A neat, short tutorial on what is, in my opinion, the canonical retrieval stack in 2024.

Want more? Follow me on X! @ricklamers