Qwen team drops 3 (!) SOTA models in one week

Week 30 of Coding with Intelligence

📰 News

Qwen releases Qwen3-Coder + a fork of gemini-cli for a terminal coding agent

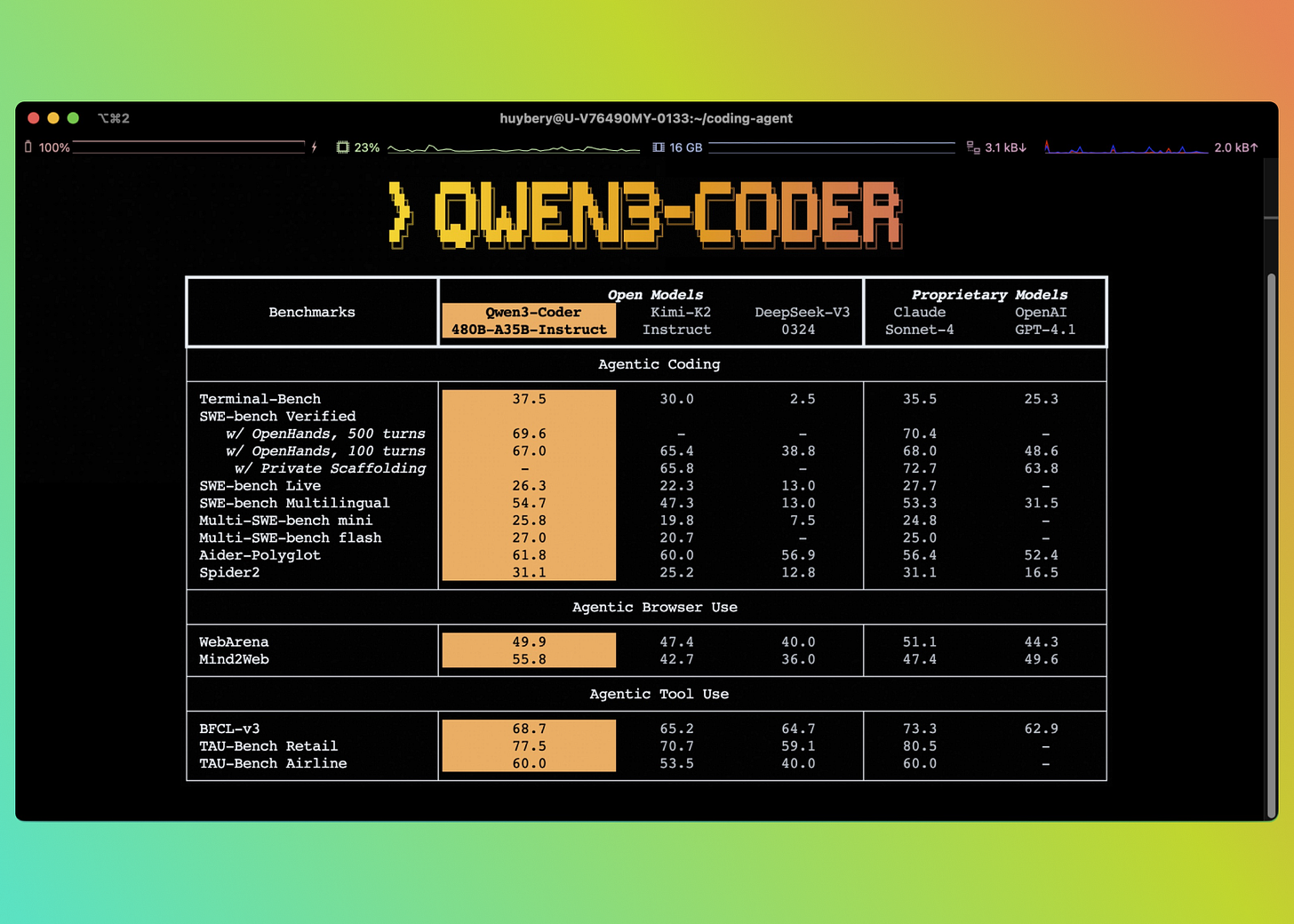

It is a large MoE model (Qwen3-Coder-480B-A35B-Instruct) with almost double the active parameters compared to the updated Qwen3 235B general reasoning/non-reasoning models. It supports a 256k context window natively with YaRN based extension to 1M (available on the Alibaba-hosted endpoint). The focus seems to be on the ability of the model to be strong at agentic coding tasks as required in the context of Claude Code-esque tools.

Their fork, called qwen-code, of the open source gemini-cli (similar to Claude Code) was done to optimize the prompts for the Qwen3-Coder model. Open Source models tend to benefit an outsized amount from prompt tweaks/iteration for maximally stable performance.

The headline performance number is the SWE-bench Verified subset and it reaches 67% vs 68% (Claude Sonnet 4) vs 48.6 (GPT-4.1), which is impressive to say the least. On terminal bench it reaches 37.5% (vs 35.5% for Claude Sonnet 4), which is crucial in coding tasks that involve running e.g. npm/git/script commands. For the full benchmark figures see the blog post.It outperforms Gemini 2.5 Pro on LiveCodeBench v6 (25.02-25.05) with 74.1% vs 72.5% while it trails Gemini 2.5 Pro on GPQA with 81.1% vs 86.4%. It gets 18.2% on Humanity's Last Exam while Gemini 2.5 Pro gets 21.6% on that.

What is interesting here is that Qwen decided to release two separate dedicated models for both the thinking and non-thinking version. The team remarked on Twitter that they believe that forcing a single model to do both through system prompt reason-toggling (hybrid approach) sacrificed on both ends (not the best reasoning model, not the best non-reasoning model).

The non-thinking model is called Instruct and can be found here https://huggingface.co/Qwen/Qwen3-235B-A22B-Instruct-2507MoonshotAI releases Kimi-K2: a 1T open source MoE

A very strong release with a whopping 1T parameters and 384 experts. The model achieves competitive scores with models like GPT-4.1, Gemini 2.5 Flash and touts reliable function calling for agentic use cases like MCP-server backed agents. See their blog post for a very detailed breakdown of comparison scores. Kudos to the rigorous evals!

A notable feature of this release is their use of Muon as their neural network optimizer. They introduce MuonClip and explain how it was able to scale up to their large 1T model setup. They dropped a tech report covering MuonClip and more here: https://github.com/MoonshotAI/Kimi-K2/blob/main/tech_report.pdf

We're hosting this model on Groq at speeds north of 400 tok/s and I personally worked on tool calling reliability through various optimizations in the chat template and tool call parsing 🔥

Find it here https://console.groq.com/docs/model/moonshotai/kimi-k2-instructGoogle DeepMind achieves IMO gold medal with Gemini Deep Think

The International Mathematical Olympiad (IMO) requires participants to solve 6 complex math problems where the answer is a detailed description of a proof demonstrating the correctness of the proposed solution. This has historically been a challenging problem for language models as the answers are hard to verify (judging the correctness of a proof is typically a lengthy and complex human guided process).

What's noteworthy about Google DeepMind's result on this year's competition is in my eyes mainly the following two things:

1) they've used a purely "token-space" based approach instead of actively relying on tool-use with expert math systems like Lean, it reasons for multiple hours (sub 4.5 hour competition limit) but impressively converges to a stable answer in the end

2) it seems like they used a general model that uses their general purpose Deep Think test time compute strategy as they state that this particular trained model used for the competition will be rolled out directly, confirming that their claim that "they only added some instructions on how to best solve IMO problems and made available a high-quality solutions of other math problems" is true

A nice detail is that they included the full final solutions and they do really read like proper mathematical answers formulated nicely. I won't claim to be able to validate their correctness, but luckily we have AI for that now.

Note: OpenAI has also claimed to have achieved the IMO gold medal but no official writeup was made available other than this thread by lead researchers Noam Brown/Alexander Wei https://x.com/alexwei_/status/1946477742855532918Contextual releases LMUnit models used for 'unit testing' LLM responses on natural language criteria

Actual Hugging Face collection https://huggingface.co/collections/ContextualAI/lmunit-6879d97293090553a9300abe

Remains to be seen whether it outperforms some of the most recent model releases on 'unit test-like LLM output judging'. But it's cool to see them follow through on their promise of releasing these model finetunes.

The models are licensed under their original respective licenses (qwen/llama).Mistral releases Speech-to-Text model Voxtral

Competitive with GPT-4o mini Transcribe, Gemini 2.5 Flash and Whisper large-v3.

Reward hacking is becoming more sophisticated and deliberate in frontier LLMs

This post highlights incidents of reward hacking that are more frequently being observed in the wild as a result of frontier labs scaling up reinforcement learning for post-training. Suppress the reward hacks, labs must!

Bytedance showcases Seed GR-3: a robotic focused Vision-Language-Action model

The demos show high dexterity object manipulation like putting a shirt on a hanger. Impressive progress on bringing humanoid robotics work on the back of large language model progress. The release includes a technical report going deeper on both the model and robot arm setup.

📦 Repos

HeavyBall: a collection of high performance optimizer implementations for PyTorch

By Lucas Nestler who's involved with https://keenagi.com (John Carmack's AGI lab).

📄 Papers

Diffusion Beats Autoregressive in Data-Constrained Settings

Interesting argument around data efficiency for language modeling using diffusion models. As various researchers have proclaimed that "we are running out of data" we might find that diffusion models become more popular over time. This in contrast to the paradigm of scaled up RL post-training which seems to be winning at the moment on top of the more classical autoregressive (MoE) models.

Dynamic Chunking for End-to-End Hierarchical Sequence Modeling

A Transformer architecture alternative by Albert Gu et al. (Chief Scientist at Cartesia and Assistant Prof. at CMU). Key ideas are related to more fluid character handling than fixed tokenization schemes such as BPE and more dynamic compute allocation through learned routing.

Large Language Models Post-training: Surveying Techniques from Alignment to Reasoning

A neat overview paper covering various kinds of paradigm developments in post-training, from parameter efficient fine-tuning to RL for reasoning. Use it as a starting pointing if you don't know what either of these terms mean.

Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Computation

Promising new LLM architecture from Google DeepMind + some research labs (Mila, KAIST). The core idea is combining adaptive compute with parameter sharing to increase efficiency. Results up to 1.7B look promising. MoR is the acronym to watch. Let's see if more labs adopt it and whether strong scaled releases drop in the future.

ASTRO: Teaching Language Models to Reason by Reflecting and Backtracking In-Context

Interesting concept of generating natural language reasoning traces using MCTS for bootstrapping reasoning patterns in non-reasoning LLMs like Llama 3. By the Meta research team.

Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety

Interesting industry-wide position paper on the role of Chain of Thought monitoring for AI safety.

μnit Scaling: Simple and Scalable FP8 LLM Training

Notes on stable FP8 LLM training by folks from Databricks Mosaic Research.

LAPO: Internalizing Reasoning Efficiency via Length-Adaptive Policy Optimization

This paper explores techniques to help models scale reasoning (test time compute) more dynamically based on whether the problem/prompt requires it. Certainly of interest to the folks at xAI shipping Grok 4 as Elon indicated that the team is working on make the reasoning duration much more adaptive based on the problem difficulty.

📱 Demos

Mirage: The World's First AI-Native UGC Game Engine Powered by Real-Time World Model

Similar to Decart's Minecraft demo, also see the Flappy Bird blog post in this newsletter edition.

🛠️ Products

GitHub strikes out to Lovable, Bolt, Claude Artifacts, Replit's of the world with their prompt-to-app launch. See Simon's reverse engineering for the details of how it works (linked in this newsletter).

📚 Resources

🦖 RExBench: A benchmark of machine learning research extensions for evaluating coding agents

Cool project to make progress on AI (coding agents) that can expand beyond the current scope/results of AI research to drive further progress in the field.

[Video] Advancing the Frontier of Silicon Intelligence: the Past, Open Problems, and the Future

Discussion of Open Problems by ex-OpenAI (now Meta Superintelligence) researcher Shuchao Bi given at Columbia University.

[Video] Fei-Fei Li: Spatial Intelligence is the Next Frontier in AI

Highlights the importance of 3D (world) (4D world + time) understanding for solving AGI. Interesting view, as it's easy to come up with some tasks that stress test this capability for a supposed AGI system.

Flappy Bird World Model in the Browser: interactive + real-time diffusion architectures

What a fascinating deep dive into making a Flappy Bird World Model run more efficiently. A peek behind the curtain that powers recently covered projects like Decart's viral Minecraft world model project.

Tokenomics #1: The Pricing Evolution of AI Coding Agents

Interesting breakdown of Dan Nguyen-Huu on the various explorations that code generation startups have gone through as code generation apps have taken off (from Cursor to Lovable to Devin to Claude Code). I agree that the hybrid (base + credits) approach feels like a sweet spot that ends up working well where some users are extreme outlier heavy users because of their consumption patterns, while maintaining acceptable fixed cost structures for most users that sit within the Q1-Q3 quartiles of the normal distribution.

Open Source RL Libraries for LLMs

A comparison of RL training libraries for LLMs by Anyscale. The Verl is most mature and the verifiers library easiest to get started with.

GitHub Spark deconstructed by Simon Willison

Epic deep dive by Simon on implementation details of GitHub Spark.

What stands out is the gigantic 5000+ word system prompt. It is very carefully designed and the main thing responsible for getting great results from a simple prompt-to-app input. And of course, you can copy it and use it outside of GitHub Spark if you so desire ;-)Improving Multi-Turn Tool Use with Reinforcement Learning

A nice write-up of how to use Reinforcement Learning based finetuning for improving tool use in open source models. They train Qwen-2.5-7B-Instruct and improve 23% (percentage points) on their particular eval. Neat end to end example with training code included (adapter from Will Brown's verifiers library).

OMEGA: Can LLMs Reason Outside the Box in Math?

AllenAI backed exploration of how well models generalize to novel math problems. They try to answer the fundamental question "Are they truly reasoning or are they just recalling familiar strategies without inventing new ones?" Their verdict? Limited today but hopeful about future capabilities as models improve in creativity and composability of isolated skills.

RealEarth Kontext LoRA for turning Google Earth into realistic images (+ video workflow)

Came across this cool project on r/StableDiffusion, shows how powerful open releases like FLUX.1-Kontext-dev from Black Forest Labs are for doing your own projects.

Weaver: Closing the Generation-Verification Gap with Weak Verifiers

Clever technique of combining multiple weak verifier models to verify LLM generated answers.

Jason Wei (ex-OpenAI, now Meta): Asymmetry of verification and verifier’s law

People familiar with the P != NP conjecture will be familiar with the idea that sometimes it's simpler (from a complexity perspective) to validate a solution's correctness than it is to come up with a solution. It seems like more labs are tapping into this natural structure of problems to scale RL where a model can produce an artifact that can be evaluated relatively efficiently, to in the end, reinforce the correctly evaluated trajectories in the model (policy update).

Jason comments on this idea from his perspective, notably, just after information leaked he'll be joining Meta's Superintelligence team.Rare glimpse on what it is like to be at OpenAI from the perspective of Calvin French-Owen (Segment Co-founder) who was co-responsible for the Codex (cloud SWE agent) product.

The Big LLM Architecture Comparison

Fantastic overview post by Sebastian Raschka on the evolution of the neural architectures used by the frontier (open source) LLMs. It covers the Mixture of Experts trend, Sliding Window Attention, QK-norm for training stability, Pre/Post-Norm variants, Grouped-Query Attention versus Multi-Head Latent Attention, LayerNorm vs RMSNorm, GELU -> SwiGLU and ties those developments to popular models like Llama 3.2, Qwen3, Gemma 3 and niche entrants like SmolLM3 from the Hugging Face team.

A welcome open source contribution by Snowflake (by the authors of DeepSpeed not to be confused with DeepSeek), ALST is a long-sequence focused training stack, dealing with the resource challenges that come with long input sequence training with transformer model architectures.

Understanding Muon: 3 part series

A deep dive on Muon, if you haven't heard of Muon, it is a neural network optimizer (alternative to e.g. Adam) that stabilizes and speeds up training by focusing on the weight update effect on model output and constraining those to avoid explosive changes in the output (often the cause of training instability).

Want more? Follow me on X! @ricklamers